Amazon S3 File Upload API Calls

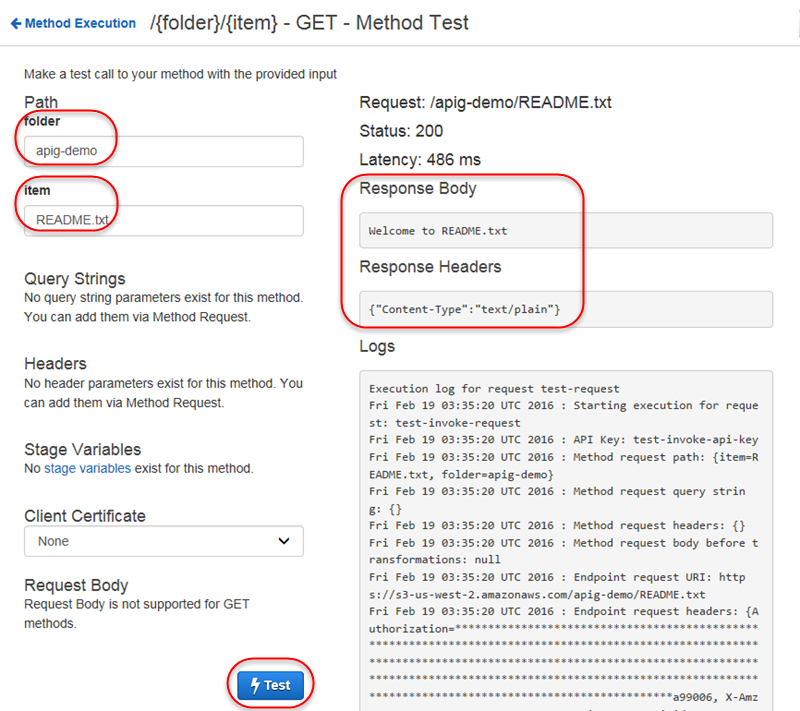

With the REST API, you can upload an object of up to 5 GB to Amazon S3 in a single operation. You can use an AWS SDK to upload an object. However, if your application requires it, you can send REST requests directly: a single PUT request uploads the data in one operation.

Ultimate Goal: Upload large video files (<200MB-3GB) from a content producer's computer to an AWS S3 bucket to use the Elastic Transcoder service.

- The content producer will be a pro user, so a little extra work on their part is not a huge burden. However, keeping it as simple as possible for them (and me) is ideal. Would be best if a web form could be used to initiate.

- There wouldn't be many hundreds of content producers, so some extra time or effort could be devoted to setting up some sort of account or process for each individual content producer. Although automation is king.

- Some said you could use some sort of Java Applet or maybe Silverlight.

- One thing I thought of was using SFTP to upload first to EC2 then it would be moved to S3 afterwards. But it kind of sounds like a pain making it secure.

- After some research I discovered S3 allows cross-origin resource sharing. So this could allow uploading directly to S3. However, how stable would this be with huge files?

- Looks like S3 allows multipart uploading as well.

Any ideas?

John Rotenstein

3 Answers

You could implement the front-end in pretty much anything that you can code to speak native S3 multipart upload, which is the approach I'd recommend for this because of its stability.

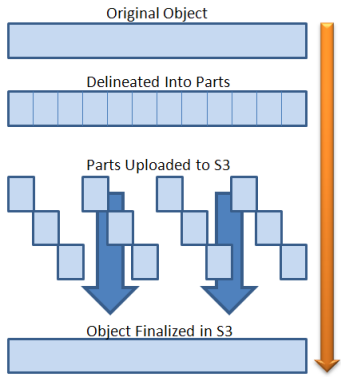

With a multipart upload, 'you' (meaning the developer, not the end user, I would suggest) choose a part size, minimum 5MB per part, and the file can be no larger than 10,000 'parts'. Every part is normally the same size (the one 'you' selected at the beginning of the upload), except for the last part, which is however many bytes are left over at the end. So the ultimate maximum size of the uploaded file depends on the part size you choose.
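The arithmetic above can be sketched in a few lines. This is only an illustration: the function name `plan_parts` and the tuple layout are assumptions made for this example, not part of any S3 library. It splits a file of a given size into numbered parts and rejects part sizes that would break the 5 MB minimum or the 10,000-part limit.

```python
MIN_PART_SIZE = 5 * 1024 * 1024   # minimum size of every part except the last
MAX_PARTS = 10_000                # limit on parts per multipart upload


def plan_parts(file_size, part_size):
    """Return (part_number, offset, length) tuples covering the whole file.

    Hypothetical helper: the name and return shape are illustrative only.
    """
    if part_size < MIN_PART_SIZE:
        raise ValueError("part size is below the 5 MB minimum")
    if file_size <= 0:
        raise ValueError("file size must be positive")
    if file_size > part_size * MAX_PARTS:
        raise ValueError("file needs more than 10,000 parts at this part size")

    parts = []
    offset = 0
    number = 1  # part numbers start at 1
    while offset < file_size:
        # Every part is part_size bytes except possibly the last one.
        length = min(part_size, file_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


if __name__ == "__main__":
    parts = plan_parts(3 * 1024 ** 3, 64 * 1024 * 1024)
    print(len(parts), "parts; last part is", parts[-1][2], "bytes")
```

With a 64MB part size, the largest file this plan accepts is 10,000 times 64MB, which is comfortably above the 3GB videos in the question.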

The size of a 'part' essentially becomes your restartable/retryable block size (win!), so your front-end implementation can resend a failed part as many times as needed until it goes through correctly. Parts don't even have to be uploaded in order; they can be uploaded in parallel, and if you upload the same part more than once, the newer one replaces the older one. With each part, S3 returns a checksum that you compare to your locally calculated one. The object doesn't become visible in S3 until you finalize the upload. When you finalize the upload, if S3 hasn't got all the parts (which it should, because they were all acknowledged when they uploaded), then the finalize call will fail.
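A minimal sketch of that resend-until-verified loop follows, under some assumptions. `send_part` is a hypothetical callable standing in for whatever performs the real upload request; it is assumed to return the checksum S3 reports as a hex MD5 string (possibly wrapped in double quotes) and to raise `OSError` on a network failure. The sketch caps the number of attempts rather than retrying forever, and `make_flaky_sender` is only a test double.

```python
import hashlib


def upload_part_with_retry(send_part, part_number, data, max_attempts=5):
    """Send one part until the returned checksum matches the local MD5.

    `send_part(part_number, data)` is a hypothetical callable that returns
    the remote checksum as a hex string and raises OSError on failure.
    """
    local_md5 = hashlib.md5(data).hexdigest()
    for _ in range(max_attempts):
        try:
            remote = send_part(part_number, data)
        except OSError:
            continue  # transient failure: resend the same part
        if remote.strip('"') == local_md5:
            return local_md5
        # Checksum mismatch: fall through and resend the part.
    raise RuntimeError(f"part {part_number} failed after {max_attempts} attempts")


def make_flaky_sender(failures):
    """Test double: fails `failures` times, then reports the correct MD5."""
    state = {"left": failures}

    def send(part_number, data):
        if state["left"] > 0:
            state["left"] -= 1
            raise OSError("simulated network error")
        return '"' + hashlib.md5(data).hexdigest() + '"'

    return send


if __name__ == "__main__":
    digest = upload_part_with_retry(make_flaky_sender(2), 1, b"example part")
    print("part 1 acknowledged with checksum", digest)
```

Because a re-uploaded part simply replaces the earlier copy, resending after an ambiguous failure is safe.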

The one thing you do have to keep in mind, though, is that multipart uploads apparently never time out, and if they are never either finalized/completed or actively aborted by the client utility, you will pay for the storage of the uploaded blocks of the incomplete uploads. So, you want to implement an automated back-end process that periodically calls ListMultipartUploads to identify those uploads that for whatever reason were never finished or canceled, and abort them.
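Such a clean-up job might look roughly like the sketch below. It assumes `s3_client` behaves like a boto3 S3 client (`list_multipart_uploads` and `abort_multipart_upload`, with each upload entry carrying `Key`, `UploadId` and a timezone-aware `Initiated` timestamp). The two-day cut-off and the function names are arbitrary choices for illustration, and only the first page of results is handled.

```python
from datetime import datetime, timedelta, timezone


def stale_uploads(uploads, now, max_age=timedelta(days=2)):
    """Return the uploads that were initiated more than `max_age` before `now`."""
    return [u for u in uploads if now - u["Initiated"] > max_age]


def abort_stale(s3_client, bucket, max_age=timedelta(days=2)):
    """Abort unfinished uploads older than `max_age`.

    `s3_client` is assumed to be a boto3 S3 client. Pagination is not
    handled here; a real job would follow the markers in the response.
    """
    response = s3_client.list_multipart_uploads(Bucket=bucket)
    now = datetime.now(timezone.utc)
    aborted = []
    for upload in stale_uploads(response.get("Uploads", []), now, max_age):
        s3_client.abort_multipart_upload(
            Bucket=bucket, Key=upload["Key"], UploadId=upload["UploadId"]
        )
        aborted.append(upload["UploadId"])
    return aborted
```

Run from a scheduler, `abort_stale(client, "YOUR_BUCKET_NAME")` would return the IDs of the uploads it aborted, which is handy for logging.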

I don't know how helpful this is as an answer to your overall question, but developing a custom front-end tool should not be a complicated matter -- the S3 API is very straightforward. I can say this because I developed a utility to do this (for my internal use -- this isn't a product plug). I may one day release it as open source, but it likely wouldn't suit your needs anyway -- it's essentially a command-line utility that can be used by automated/scheduled processes to stream ('pipe') the output of a program directly into S3 as a series of multipart parts (the files are large, so my default part-size is 64MB), and when the input stream is closed by the program generating the output, it detects this and finalizes the upload. :) I use it to stream live database backups, passed through a compression program, directly into S3 as they are generated, without ever needing those massive files to exist anywhere on any hard drive.

Your desire to have a smooth experience for your clients, in my opinion, highly commends S3 multipart for the role, and if you know how to code in anything that can generate a desktop or browser-based UI, can read local desktop filesystems, and has libraries for HTTP and SHA/HMAC, then you can write a client to do this that looks and feels exactly the way you need it to.

You wouldn't need to set up anything manually in AWS for each client, so long as you have a back-end system that authenticates the client utility to you, perhaps by a username and password sent over an SSL connection to an application on a web server, and then provides the client utility with automatically-generated temporary AWS credentials that the client utility can use to do the uploading.

Michael - sqlbot

Something like S3Browser would work. It has a GUI, a command line and works with large files. You can use IAM to create a group, grant that group access to a specific S3 bucket using a policy, then add IAM users to that group.

Your IAM group policy would look something like this:
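The policy body does not appear in this copy of the answer. As a rough reconstruction only, the sketch below builds a policy document that matches the behaviour the answer describes: users can see the names of all buckets but can read and write only one of them. The choice of actions and the helper name `bucket_read_write_policy` are assumptions, not the answerer's original text.

```python
import json


def bucket_read_write_policy(bucket_name):
    """Build an IAM policy document granting read-write access to one bucket.

    Assumed reconstruction; the original policy is not available.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # lets S3 clients display the list of bucket names
                "Effect": "Allow",
                "Action": "s3:ListAllMyBuckets",
                "Resource": "arn:aws:s3:::*",
            },
            {   # listing the contents of the one permitted bucket
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {   # reading, writing and deleting objects inside that bucket
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:DeleteObject",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(bucket_read_write_policy("YOUR_BUCKET_NAME"), indent=2))
```

The printed JSON is what would be pasted into the group's policy editor, after replacing YOUR_BUCKET_NAME.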

Adding an IAM user to this group will allow them to use S3Browser and only have read-write access to YOUR_BUCKET_NAME. However, they will see a list of your other buckets, just not be able to read/write to them. You'll also need to generate an AWS Access Key and Secret for each IAM user and provide those 2 items to whoever is using S3Browser.

You can use Minio client 'mc'.

You can mirror a local folder to an S3 bucket with a simple command. Adding this to cron can automate the sync from the local folder to the remote S3 bucket.

Alternatively you can check minio-java library.

PS: I contribute to the project & would love to get your valuable feedback & contribution.

koolhead17

Amazon S3 is integrated with AWS CloudTrail, a service that provides a record of actions taken by a user, role, or an AWS service in Amazon S3. CloudTrail captures a subset of API calls for Amazon S3 as events, including calls from the Amazon S3 console and from code calls to the Amazon S3 APIs. If you create a trail, you can enable continuous delivery of CloudTrail events to an Amazon S3 bucket, including events for Amazon S3. If you don't configure a trail, you can still view the most recent events in the CloudTrail console in Event history. Using the information collected by CloudTrail, you can determine the request that was made to Amazon S3, the IP address from which the request was made, who made the request, when it was made, and additional details.

To learn more about CloudTrail, including how to configure and enable it, see the AWS CloudTrail User Guide.

Amazon S3 Information in CloudTrail

CloudTrail is enabled on your AWS account when you create the account. When supported event activity occurs in Amazon S3, that activity is recorded in a CloudTrail event along with other AWS service events in Event history. You can view, search, and download recent events in your AWS account. For more information, see Viewing Events with CloudTrail Event History.

For an ongoing record of events in your AWS account, including events for Amazon S3, create a trail. A trail enables CloudTrail to deliver log files to an Amazon S3 bucket. By default, when you create a trail in the console, the trail applies to all Regions. The trail logs events from all Regions in the AWS partition and delivers the log files to the Amazon S3 bucket that you specify. Additionally, you can configure other AWS services to further analyze and act upon the event data collected in CloudTrail logs. For more information, see the following:

Receiving CloudTrail Log Files from Multiple Regions and Receiving CloudTrail Log Files from Multiple Accounts

Every event or log entry contains information about who generated the request. The identity information helps you determine the following:

- Whether the request was made with root or IAM user credentials.

- Whether the request was made with temporary security credentials for a role or federated user.

- Whether the request was made by another AWS service.

For more information, see the CloudTrail userIdentity Element.
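As a small illustration of reading that identity information, the sketch below maps the `type` field of a record's `userIdentity` element onto the three questions listed above. The function name and the wording of the returned strings are assumptions made for this example; only a handful of common `type` values are handled.

```python
def describe_identity(user_identity):
    """Summarise who made a request from a CloudTrail userIdentity element.

    `user_identity` is the parsed JSON object (a dict). Hypothetical helper.
    """
    kind = user_identity.get("type", "Unknown")
    if kind == "Root":
        return "root credentials"
    if kind == "IAMUser":
        return "IAM user credentials"
    if kind in ("AssumedRole", "FederatedUser"):
        return "temporary security credentials"
    if kind == "AWSService":
        return "another AWS service"
    return f"other identity type: {kind}"


if __name__ == "__main__":
    print(describe_identity({"type": "IAMUser", "userName": "example-user"}))
```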

You can store your log files in your bucket for as long as you want, but you can also define Amazon S3 lifecycle rules to archive or delete log files automatically. By default, your log files are encrypted by using Amazon S3 server-side encryption (SSE).

Amazon S3 Bucket-Level Actions Tracked by CloudTrail Logging

By default, CloudTrail logs bucket-level actions. Amazon S3 records are written together with other AWS service records in a log file. CloudTrail determines when to create and write to a new file based on a time period and file size.

The tables in this section list the Amazon S3 bucket-level actions that are supported for logging by CloudTrail.

| REST API Name | API Event Name Used in CloudTrail Log |
|---|---|
| DeleteBucket | |
| DeleteBucketCors | |
| DeleteBucketEncryption | |
| DeleteBucketLifecycle | |
| DeleteBucketPolicy | |
| DeleteBucketReplication | |
| DeleteBucketTagging | |
| DeleteBucketWebsite | |
| GetBucketAcl | |
| GetBucketCors | |
| GetBucketEncryption | |
| GetBucketLifecycle | |
| GetBucketLocation | |
| GetBucketLogging | |
| GetBucketNotification | |
| GetBucketPolicy | |
| GetBucketReplication | |
| GetBucketRequestPay | |
| GetBucketTagging | |
| GetBucketVersioning | |
| GetBucketWebsite | |
| ListBuckets | |
| CreateBucket | |
| PutBucketAcl | |
| PutBucketCors | |
| PutBucketEncryption | |
| PutBucketLifecycle | |
| PutBucketLogging | |
| PutBucketNotification | |
| PutBucketPolicy | |
| PutBucketReplication | |
| PutBucketRequestPay | |
| PutBucketTagging | |
| PutBucketVersioning | |
| PutBucketWebsite | |

In addition to these API operations, you can also use the OPTIONS object action. Although it is an object-level action, CloudTrail logging treats it like a bucket-level action because it checks the CORS configuration of a bucket.

Amazon S3 Object-Level Actions Tracked by CloudTrail Logging

You can also get CloudTrail logs for object-level Amazon S3 actions. To do this, specify the Amazon S3 object for your trail. When an object-level action occurs in your account, CloudTrail evaluates your trail settings. If the event matches the object that you specified in a trail, the event is logged. For more information, see How Do I Enable Object-Level Logging for an S3 Bucket with AWS CloudTrail Data Events? in the Amazon Simple Storage Service Console User Guide and Data Events in the AWS CloudTrail User Guide. The following table lists the object-level actions that CloudTrail can log:

| REST API Name | API Event Name Used in CloudTrail Log |
|---|---|
| AbortMultipartUpload | |
| CompleteMultipartUpload | |
| DeleteObjects | |
| DeleteObject | |
| GetObject | |
| GetObjectAcl | |
| GetObjectTagging | |
| GetObjectTorrent | |
| HeadObject | |
| CreateMultipartUpload | |
| ListParts | |
| PostObject | |
| RestoreObject | |
| PutObject | |
| PutObjectAcl | |
| PutObjectTagging | |
| CopyObject | |
| SelectObjectContent | |
| UploadPart | |
| UploadPartCopy | |

In addition to these operations, you can use the following bucket-level operations to get CloudTrail logs as object-level Amazon S3 actions under certain conditions:

- GET Bucket (List Objects) Version 2 – Select a prefix specified in the trail.

- GET Bucket Object versions – Select a prefix specified in the trail.

- HEAD Bucket – Specify a bucket and an empty prefix.

- Delete Multiple Objects – Specify a bucket and an empty prefix.

Note

CloudTrail does not log key names for the keys that are deleted using the Delete Multiple Objects operation.
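To make "specify the Amazon S3 object for your trail" more concrete, here is a hedged sketch of the event selector structure that the CloudTrail API accepts for S3 data events. The helper name `s3_data_event_selector` and its defaults are assumptions. An empty prefix, as mentioned in the list above, produces an ARN ending in `bucket/`, which covers every object in the bucket.

```python
def s3_data_event_selector(bucket, prefix="", read_write_type="All"):
    """Build one CloudTrail event selector for object-level S3 logging.

    Hypothetical helper; the returned dict follows the shape used by the
    CloudTrail PutEventSelectors API.
    """
    if read_write_type not in ("All", "ReadOnly", "WriteOnly"):
        raise ValueError("read_write_type must be All, ReadOnly or WriteOnly")
    return {
        "ReadWriteType": read_write_type,
        "IncludeManagementEvents": True,
        "DataResources": [
            {
                "Type": "AWS::S3::Object",
                # bucket plus prefix; an empty prefix means the whole bucket
                "Values": [f"arn:aws:s3:::{bucket}/{prefix}"],
            }
        ],
    }


if __name__ == "__main__":
    print(s3_data_event_selector("YOUR_BUCKET_NAME", prefix="videos/"))
```

With boto3, a list of such selectors could be passed as `EventSelectors` to the CloudTrail client's `put_event_selectors` call together with the trail name.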

Object-Level Actions in Cross-Account Scenarios

The following are special use cases involving the object-level API calls in cross-account scenarios and how CloudTrail logs are reported. CloudTrail always delivers logs to the requester (who made the API call). When setting up cross-account access, consider the examples in this section.

Note

The examples assume that CloudTrail logs are appropriately configured.

Example 1: CloudTrail Delivers Access Logs to the Bucket Owner

CloudTrail delivers access logs to the bucket owner only if the bucket owner has permissions for the same object API. Consider the following cross-account scenario:

Account-A owns the bucket.

Account-B (the requester) tries to access an object in that bucket.

CloudTrail always delivers object-level API access logs to the requester. In addition, CloudTrail also delivers the same logs to the bucket owner only if the bucket owner has permissions for the same API actions on that object.

Note

If the bucket owner is also the object owner, the bucket owner gets the object access logs. Otherwise, the bucket owner must get permissions, through the object ACL, for the same object API to get the same object-access API logs.
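The delivery rule in Example 1 can be restated as a few lines of code. This is purely a toy model of the rule as described above, with made-up names, not anything CloudTrail exposes.

```python
def log_recipients(requester, bucket_owner, owner_has_same_permission):
    """Return the accounts that receive the object-level access log.

    Toy model of Example 1: the requester always gets the log, and the
    bucket owner gets it only when it has permission for the same API
    action on the object (as object owner or through the object ACL).
    """
    recipients = [requester]
    if bucket_owner != requester and owner_has_same_permission:
        recipients.append(bucket_owner)
    return recipients


if __name__ == "__main__":
    print(log_recipients("Account-B", "Account-A", owner_has_same_permission=False))
```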

Example 2: CloudTrail Does Not Proliferate Email Addresses Used in Setting Object ACLs

Consider the following cross-account scenario:

Account-A owns the bucket.

Account-B (the requester) sends a request to set an object ACL grant using an email address. For information about ACLs, see Access Control List (ACL) Overview.

The requester gets the logs along with the email information. The bucket owner, if eligible to receive logs as in Example 1, also gets the CloudTrail log reporting the event. However, the bucket owner doesn't get the ACL configuration information, specifically the grantee email and the grant. The only information that the log gives the bucket owner is that an ACL API call was made by Account-B.

CloudTrail Tracking with Amazon S3 SOAP API Calls

CloudTrail tracks Amazon S3 SOAP API calls. Amazon S3 SOAP support over HTTP is deprecated, but it is still available over HTTPS. For more information about Amazon S3 SOAP support, see Appendix A: Using the SOAP API.

Important

Newer Amazon S3 features are not supported for SOAP. We recommend that you use either the REST API or the AWS SDKs.

Amazon S3 SOAP Actions Tracked by CloudTrail Logging

| SOAP API Name | API Event Name Used in CloudTrail Log |
|---|---|
| ListBuckets | |
| CreateBucket | |
| DeleteBucket | |
| GetBucketAcl | |
| PutBucketAcl | |
| GetBucketLogging | |
| PutBucketLogging | |

Using CloudTrail Logs with Amazon S3 Server Access Logs and CloudWatch Logs

AWS CloudTrail logs provide a record of actions taken by a user, role, or an AWS service in Amazon S3, while Amazon S3 server access logs provide detailed records for the requests that are made to an S3 bucket. For more information on how the different logs work and their properties, performance and costs, see Logging with Amazon S3. You can use AWS CloudTrail logs together with server access logs for Amazon S3. CloudTrail logs provide you with detailed API tracking for Amazon S3 bucket-level and object-level operations. Server access logs for Amazon S3 provide you visibility into object-level operations on your data in Amazon S3. For more information about server access logs, see Amazon S3 Server Access Logging.

You can also use CloudTrail logs together with CloudWatch for Amazon S3. CloudTrail integration with CloudWatch Logs delivers S3 bucket-level API activity captured by CloudTrail to a CloudWatch log stream in the CloudWatch log group that you specify. You can create CloudWatch alarms for monitoring specific API activity and receive email notifications when the specific API activity occurs. For more information about CloudWatch alarms for monitoring specific API activity, see the AWS CloudTrail User Guide. For more information about using CloudWatch with Amazon S3, see Monitoring Metrics with Amazon CloudWatch.

Example: Amazon S3 Log File Entries

A trail is a configuration that enables delivery of events as log files to an Amazon S3 bucket that you specify. CloudTrail log files contain one or more log entries. An event represents a single request from any source. It includes information about the requested action, the date and time of the action, request parameters, and so on. CloudTrail log files are not an ordered stack trace of the public API calls, so they do not appear in any specific order.
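Because entries in a log file are not ordered, a consumer usually has to filter and sort them itself. The sketch below assumes the log file has already been downloaded and decompressed into a JSON string with a top-level `Records` list. The function name is hypothetical, and the sample records in the demo are invented for illustration rather than taken from a real trail.

```python
import json


def s3_events(log_text, event_names=None):
    """Return (eventTime, eventName, sourceIPAddress) for S3 records, oldest first.

    `log_text` is the decompressed JSON text of one CloudTrail log file.
    """
    records = json.loads(log_text).get("Records", [])
    selected = []
    for record in records:
        if record.get("eventSource") != "s3.amazonaws.com":
            continue  # skip events from other AWS services
        if event_names and record.get("eventName") not in event_names:
            continue
        selected.append(
            (
                record.get("eventTime") or "",
                record.get("eventName"),
                record.get("sourceIPAddress"),
            )
        )
    # eventTime is an ISO 8601 UTC string, so a plain string sort is chronological.
    return sorted(selected, key=lambda item: item[0])


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sample = json.dumps({"Records": [
        {"eventTime": "2019-02-01T03:18:19Z", "eventSource": "s3.amazonaws.com",
         "eventName": "PutBucketAcl", "sourceIPAddress": "192.0.2.10"},
        {"eventTime": "2019-02-01T03:10:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "ListBuckets", "sourceIPAddress": "192.0.2.10"},
    ]})
    for event in s3_events(sample):
        print(event)
```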

The following example shows a CloudTrail log entry that demonstrates the GET Service, PUT Bucket acl, and GET Bucket versioning actions.